Contents

- 1 What is Edge Computing?

- 2 Why does edge computing matter?

- 3 How does edge computing work?

- 4 Why is there a need for edge computing?

- 5 What are the major benefits of edge computing?

- 6 Edge computing: Things to consider

- 7 How is edge computing implemented?

- 8 What are some of the challenges of edge computing?

- 9 Edge vs. cloud vs. fog computing

- 10 Edge computing, IoT and 5G possibilities

- 11 Harnessing the potential of devices at the edge

- 12 How is edge computing different from other computing models?

- 13 Here are some edge computing use cases and examples

- 14 Ambitions for 2022

- 15 Want to know more?

What is Edge Computing?

Edge computing is a computing paradigm that allows computation to take place at or close to the data source. This contrasts with the conventional approach, in which a centralized cloud data centre is the sole place where computing happens. This does not mean the cloud will disappear; it means the cloud is moving closer to you.

Edge computing improves the performance of online applications and Internet-connected devices by bringing processing closer to the data source. In this context, “edge” refers literally to geographic distribution: computing resources are spread out toward the edge of the network, near users and devices. This eliminates the need for long-distance connections between clients and servers, lowering latency and bandwidth consumption.

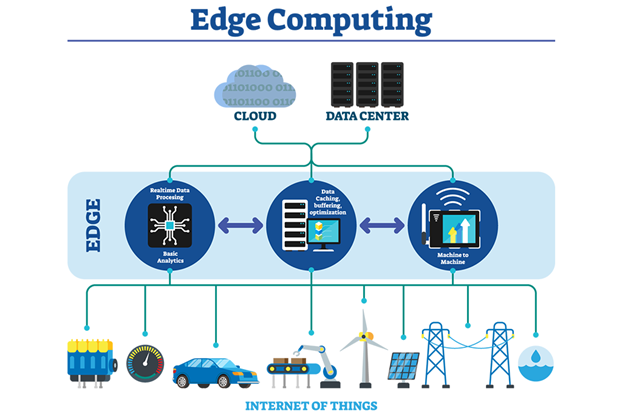

Source: Edge Computing

Why does edge computing matter?

Consider the following scenario: your alarm clock goes off while you’re sleeping in your temperature-controlled bed, and your curtains open to let in just enough sunlight to wake you gently. You hear cheerful music playing through your smart speaker as you get out of bed. Sensors in your floor alert your coffee machine that you’ve gotten up, and it starts brewing. As you approach the kitchen, the music is replaced by a narration of the morning’s news headlines, and a pot of freshly brewed coffee greets you.

The Internet of Things (IoT), a network of interconnected devices that communicate with one another through software, sensors, and other technologies, has been around for a while. In sectors including healthcare, transportation, communication, manufacturing, and, of course, smart homes, it has spawned a slew of ground-breaking applications. There were 620 distinct publicly recorded IoT platforms in 2016, up from only 260 in 2015. Amazon and Microsoft are among the giants competing for a piece of the ever-growing IoT platform pie. According to estimates, worldwide investment in IoT will reach a staggering 1.1 trillion dollars by 2023. This growth may be explained by organizations’ increased attention to security, as well as the need to improve efficiency and reduce operating expenses. Smart homes and smart cities are also being viewed as worthwhile technology investments.

However, there is a bottleneck in the development of IoT and the other technologies that will usher us all into the future. It lies in processing all the data generated by IoT devices (among other sources), which clogs up today’s centralized networks. Edge computing addresses this bottleneck by processing data closer to where it is generated. With its improvements in efficiency, latency, bandwidth usage, and network congestion, edge computing is the magic spell that will bring us closer to that wonderful, automatically brewed pot of coffee in the morning. It also has significant implications for industries in terms of security, efficiency, and cost reduction.

How does edge computing work?

Our lives have been entirely taken over by what we’ve come to know as cloud computing services, whether it’s email services like Gmail or online picture storage services like iCloud. Even major corporations have begun to migrate their essential applications to the cloud, including data from thousands of sensors installed within their production units, in order to gain rapid insights and the capacity to remotely monitor their equipment.

However, as these Internet of Things sensors gain traction and fuel rapid data growth, they place enormous strain on central cloud servers and begin to suffocate network capacity.

To address these issues, edge computing brings computation closer to these IoT devices. Think of it as processing your video edits on your iPhone itself rather than transmitting them to a central server. Furthermore, computing demand is migrating closer to where devices are and where data is consumed, so data processing needs to move closer to the devices as well.

To create a cloud edge, operators build multiple edge data centres rather than a single cloud data centre. By bringing computation closer to devices, the cloud edge enables numerous new applications, among them autonomous driving, high-end cloud gaming, and remote 3D modeling. Each of these applications requires extremely low latency, which pushes computation toward the edge and makes edge networks the preferred architecture.
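The idea of serving each client from the nearest of several edge data centres can be sketched in a few lines of Python. This is a minimal illustration, assuming the client already has round-trip latency measurements to each candidate site; the site names, figures, and the `pick_edge_site` function are hypothetical. In practice this routing is usually handled by network infrastructure, such as DNS-based or anycast routing, rather than by application code.

```python
from typing import Dict, Optional


def pick_edge_site(rtt_ms: Dict[str, float], budget_ms: float) -> Optional[str]:
    """Return the lowest-latency edge site that meets the latency budget.

    rtt_ms maps a (hypothetical) edge site name to its measured round-trip
    time in milliseconds. Returns None if no site is fast enough.
    """
    # Keep only the edge sites that satisfy the application's latency budget.
    eligible = {site: rtt for site, rtt in rtt_ms.items() if rtt <= budget_ms}
    if not eligible:
        return None
    # Among the eligible sites, pick the one with the smallest round-trip time.
    return min(eligible, key=eligible.get)


if __name__ == "__main__":
    measured = {"edge-north": 8.5, "edge-south": 4.2, "edge-east": 15.0}
    print(pick_edge_site(measured, budget_ms=10))  # edge-south
    print(pick_edge_site(measured, budget_ms=2))   # None
```

If no site meets the budget, the function returns `None`, leaving it to the caller to decide whether to fall back to the central cloud or degrade the service.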

Edge computing appears to be the ideal answer: it performs data processing, and even analytics, at or near the original data source, which lowers latency and bandwidth costs and makes the network less prone to a single point of failure.

Analysts predict that by 2025, there will be 30.9 billion IoT devices on the market, up from 13.8 billion in 2021. Increases in IoT devices will place enormous strain on cloud data centres, necessitating the use of edge IoT to keep up with the demand.
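To put that forecast in perspective, the two device counts imply a compound annual growth rate of (end / start)^(1/years) − 1. The short Python check below is plain arithmetic on the figures quoted in the paragraph above, not part of the analysts’ forecast itself; the `cagr` helper is introduced here for illustration.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


# IoT device counts in billions: 13.8 (2021) and 30.9 (2025, forecast).
rate = cagr(13.8, 30.9, 2025 - 2021)
print(f"Implied growth: {rate:.1%} per year")  # Implied growth: 22.3% per year
```

In other words, the forecast corresponds to the installed base growing by roughly a fifth every year, compounding.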

Why is there a need for edge computing?

The growth of connected devices such as smartphones, tablets, and other gadgets, together with the recent surge in online content consumption, may eventually overwhelm today’s centralized networks. According to the International Data Corporation (IDC), there will be 55.7 billion connected devices worldwide by 2025, 75% of them connected to an IoT platform. IDC also forecasts that connected IoT devices will generate up to 73.1 ZB of data by 2025, up from 18.3 ZB in 2019. While video surveillance and security will account for the majority of this data, industrial IoT applications will also contribute significantly, increasing the need for cloud edge and edge IoT.

With such a surge in data, today’s centralized networks may eventually be swamped with traffic. Edge computing attempts to address this impending surge with a distributed IT architecture that shifts data centre investment to the network periphery.

What are the major benefits of edge computing?

Edge computing addresses three network challenges: latency for time-sensitive applications, IoT efficiency where bandwidth is limited, and overall network congestion.

Latency:

Because data is processed physically close to where it is produced, rather than at a distant data centre or cloud, the time to action is shorter. With data processing and storage taking place at or near edge devices, IoT and mobile endpoints can respond to critical information in near real time.
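A rough calculation shows why physical distance matters. The sketch below assumes signals travel through optical fibre at about 200,000 km/s (roughly two-thirds of the speed of light in a vacuum) and counts only propagation delay, ignoring routing, queuing, and processing time, so real round trips will be longer. The distances are illustrative assumptions.

```python
# Approximate signal speed in optical fibre: ~200,000 km/s = 200 km per ms.
FIBRE_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip propagation delay over fibre, in ms."""
    return 2 * distance_km / FIBRE_KM_PER_MS


for label, km in [("nearby edge site", 50), ("distant cloud region", 2000)]:
    print(f"{label}: {min_round_trip_ms(km):.1f} ms")
# nearby edge site: 0.5 ms
# distant cloud region: 20.0 ms
```

No amount of server optimization removes this floor; only moving the computation closer does.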

Congestion:

Edge computing will help the wide-area network cope with increased traffic. Bandwidth is a significant constraint in the age of mobile computing and the Internet of Things, and using less of it saves both time and money. Rather than overwhelming the network with relatively unimportant raw data, edge devices will process, filter, and compress data locally.
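The local filtering step can be illustrated with a minimal Python sketch. It assumes a device collects a window of numeric sensor readings and, instead of forwarding every sample, uploads only a short summary plus any readings outside an expected range. The `summarize_window` function, the thresholds, and the payload shape are hypothetical.

```python
import json
from statistics import mean
from typing import Dict, List


def summarize_window(readings: List[float], low: float, high: float) -> Dict:
    """Reduce a window of raw sensor readings to a compact summary.

    Only summary statistics and out-of-range readings are kept; `low` and
    `high` are hypothetical operating limits for the sensor.
    """
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
        # Forward individual readings only when they look abnormal.
        "anomalies": [r for r in readings if r < low or r > high],
    }


if __name__ == "__main__":
    raw = [21.0, 21.2, 21.1, 35.7, 21.3, 21.2]  # e.g. temperatures in deg C
    summary = summarize_window(raw, low=15.0, high=30.0)
    payload = json.dumps(summary)  # what the edge device would upload
    print(payload)
```

With realistic windows of thousands of samples, the summary stays roughly the same size while the raw data grows, which is where the bandwidth saving comes from.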

Bandwidth:

The edge computing topology allows IoT devices to operate in environments where the network connection is unstable, such as offshore oil rigs, distant power facilities, and remote military installations. Even when the cloud connection is intermittent, local compute and storage resources enable continued operation.
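One common way to keep operating through an intermittent connection is a store-and-forward buffer: record everything locally, then upload when the link returns. The Python sketch below is a minimal illustration under stated assumptions; the `send` callback stands in for a real network call and, like the `StoreAndForward` class itself, is hypothetical. The buffer is bounded, so if an outage lasts long enough the oldest records are discarded.

```python
from collections import deque
from typing import Callable, Deque


class StoreAndForward:
    """Buffer records locally and upload them when the link is available.

    `send` is a hypothetical upload callback that returns True on success.
    When the buffer is full, the oldest records are dropped (deque maxlen).
    """

    def __init__(self, send: Callable[[dict], bool], max_records: int = 10_000):
        self._send = send
        self._buffer: Deque[dict] = deque(maxlen=max_records)

    def record(self, item: dict) -> None:
        # Always store locally first, so operation continues while offline.
        self._buffer.append(item)

    def flush(self) -> int:
        """Upload buffered records oldest-first; return how many were sent."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break  # link is down again; keep the rest for later
            self._buffer.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._buffer)


if __name__ == "__main__":
    link_up = False
    uploaded = []

    def send(item: dict) -> bool:
        # Stand-in for a real network call: succeeds only while the link is up.
        if link_up:
            uploaded.append(item)
            return True
        return False

    buffer = StoreAndForward(send, max_records=100)
    buffer.record({"reading": 1})
    buffer.record({"reading": 2})
    print(buffer.flush())  # 0, the link is down
    link_up = True
    print(buffer.flush())  # 2
```

Records are removed from the buffer only after a successful upload, so a failed send never loses data that is still in the buffer.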

Edge computing: Things to consider

Moving to edge computing may seem exciting, but preparing for it is a challenging exercise. We need a comprehensive strategy to ensure a sound edge deployment. Here’s a list of things to consider when choosing and implementing edge computing:

- The rationale behind the strategy: The first vital element is understanding why the organization needs edge computing. It is important to clearly define the technical and business problems the organization is trying to solve.

- Comparison of hardware and software options: As we move closer to the implementation phase, we need to choose from a large pool of vendors to build our edge network. Each option has to be evaluated in terms of cost, performance, interoperability, features, and support.

- Extensive monitoring: The edge architecture should be designed to enable comprehensive monitoring. Monitoring tools should provide an overview of deployment, configuration, and security, and cover metrics such as uptime, storage capacity, and utilization.

- Maintenance requirements: For successful implementation, we need to look at all aspects ranging from security, connectivity, and management to physical maintenance.
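Two of the monitoring metrics mentioned above can be sketched with the Python standard library. This is a minimal illustration, assuming uptime is approximated as the share of successful periodic health checks (heartbeats) and storage capacity is read from the local filesystem; the function names and heartbeat data are hypothetical, and a production deployment would normally export such metrics to a dedicated monitoring system.

```python
import shutil
from typing import List


def availability_pct(heartbeats: List[bool]) -> float:
    """Share of successful health checks, as a percentage (an uptime proxy)."""
    if not heartbeats:
        return 0.0
    return 100.0 * sum(heartbeats) / len(heartbeats)


def storage_used_pct(path: str = ".") -> float:
    """Percentage of the filesystem containing `path` that is in use."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    if usage.total == 0:
        return 0.0
    return 100.0 * usage.used / usage.total


if __name__ == "__main__":
    # Hypothetical heartbeat results collected once per minute.
    checks = [True, True, False, True]
    print(f"availability: {availability_pct(checks):.1f}%")  # availability: 75.0%
    print(f"storage used: {storage_used_pct():.1f}%")
```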

How is edge computing implemented?

Implementing edge computing is a detailed, step-by-step process. Following the steps below helps ensure a systematic implementation of edge computing solutions.

- Identify areas of concern: We need to identify pain points and areas of operations that will specifically benefit from the implementation of edge computing.

- Plan for migration: Bring OT (operational technology) and IT staff together to develop a plan for shifting to the edge, focusing on the areas identified in the previous step.

- Build design specifications: Application cycles, lags, turnaround times, and required response times form the basis of the design specifications.

- Specify role of local/edge devices: In this stage, we will specify what data will be handled by edge devices, where it will be stored, and what will be reported to the cloud and administrative platforms.

- Research edge-enabling devices: From the large pool of edge components and devices, we need to choose the ones that suit our project requirements.

- Incorporate cybersecurity capabilities: For a safe and secure environment, cybersecurity capabilities have to be built into every part of the edge computing network.
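The step that specifies the role of local and edge devices amounts to writing down a data-placement policy: where each kind of data is handled, where it is stored, and whether anything is reported to the cloud. A minimal Python sketch, assuming a hypothetical factory deployment; the categories and placements below are illustrative, not recommendations.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class DataPolicy:
    processed_at: str      # "edge" or "cloud"
    stored_at: str         # where the data is kept
    report_to_cloud: bool  # whether results are forwarded upstream


# Hypothetical data categories for a factory deployment.
POLICIES: Dict[str, DataPolicy] = {
    "vibration_raw": DataPolicy("edge", "edge", report_to_cloud=False),
    "vibration_alerts": DataPolicy("edge", "edge", report_to_cloud=True),
    "daily_production_summary": DataPolicy("edge", "cloud", report_to_cloud=True),
    "model_training_data": DataPolicy("cloud", "cloud", report_to_cloud=True),
}

# Conservative default: anything not explicitly listed stays on the edge.
DEFAULT_POLICY = DataPolicy("edge", "edge", report_to_cloud=False)


def route(category: str) -> DataPolicy:
    """Look up the placement policy for a data category."""
    return POLICIES.get(category, DEFAULT_POLICY)
```

Keeping unknown categories local by default is one design choice among several; it avoids accidentally sending unclassified data off-site.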

What are some of the challenges of edge computing?

Edge computing brings data processing capabilities closer to the source. It enables a large number of use cases, but it is not without challenges. Some of the challenges we may face in our edge computing journey are:

- Network bandwidth: Traditionally, networks provide higher bandwidth at central data centres and lower bandwidth at the endpoints. Edge computing, however, requires more bandwidth at every individual end of the network.

- Distributed computing: Businesses need to account for edge servers as an additional computing tier. In most architectures, components sit at some distance from where computation is needed, whereas edge computing brings systems close to those computational areas.

- Latency: Two-way computation and distributed computing can both cause latency issues.

- Security: Every IoT device is a potential point of vulnerability in the network, so it is important to emphasize proper device management and the security of storage and computing resources.

- Data accumulation: Collecting and storing large volumes of data at edge servers is a challenge in terms of security. Also, one must decide what data to keep and what to delete after analysis.
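Deciding what data to keep and what to delete after analysis is often expressed as a retention rule. The Python sketch below is a minimal illustration, assuming each stored record carries a creation time and a flag set during analysis; the `Record` type, `apply_retention` function, and retention window are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class Record:
    created: datetime
    flagged: bool  # e.g. marked as anomalous during analysis


def apply_retention(records: List[Record], now: datetime, keep_days: int) -> List[Record]:
    """Keep recent records and anything flagged; drop the rest.

    `keep_days` is a hypothetical retention window. Flagged records are kept
    regardless of age so they remain available for investigation.
    """
    cutoff = now - timedelta(days=keep_days)
    return [r for r in records if r.flagged or r.created >= cutoff]


if __name__ == "__main__":
    now = datetime(2022, 1, 31)
    records = [
        Record(datetime(2022, 1, 30), flagged=False),  # recent: kept
        Record(datetime(2022, 1, 1), flagged=False),   # old, unflagged: dropped
        Record(datetime(2021, 12, 1), flagged=True),   # old but flagged: kept
    ]
    print(len(apply_retention(records, now, keep_days=7)))  # 2
```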

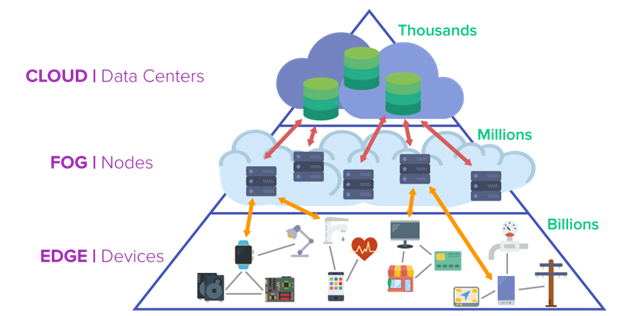

Edge vs. cloud vs. fog computing

Edge computing, cloud computing, and fog computing are terms that are often used interchangeably. Even though all three of them focus on distributed computing, they aren’t the same.

The key difference between the three lies in where data is processed. In cloud computing, processing takes place on the cloud server; in edge computing, it takes place on the devices themselves or on the IoT sensors connected to them; and in fog computing, it takes place between the data source and the cloud or another data center.

Cloud computing is suited to long-term, in-depth data analysis and storage, whereas edge and fog computing work better for the quick analysis required for real-time responses.

Source: Edge vs. Cloud vs. Fog
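The distinction above can be summarized as a placement rule: tight latency budgets favour the edge, moderate ones the fog layer, and long-term analysis the cloud. The Python sketch below is a simplified illustration only; the 10 ms and 100 ms thresholds are arbitrary assumptions, and real placement decisions also weigh cost, data volume, and regulation.

```python
from enum import Enum


class Tier(Enum):
    EDGE = "edge"    # on or next to the device that produces the data
    FOG = "fog"      # between the data source and the cloud
    CLOUD = "cloud"  # central data centre


def place(latency_budget_ms: float, long_term_analysis: bool) -> Tier:
    """Pick a processing tier (thresholds are illustrative assumptions)."""
    if long_term_analysis:
        # Deep, long-term analysis and storage suit the cloud.
        return Tier.CLOUD
    if latency_budget_ms < 10:
        return Tier.EDGE
    if latency_budget_ms < 100:
        return Tier.FOG
    return Tier.CLOUD


if __name__ == "__main__":
    print(place(5, long_term_analysis=False))   # Tier.EDGE
    print(place(50, long_term_analysis=False))  # Tier.FOG
    print(place(5, long_term_analysis=True))    # Tier.CLOUD
```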

Edge computing, IoT and 5G possibilities

The emergence of IoT devices brought edge computing to widespread attention, and the continued evolution of IoT devices is expected to have a significant impact on future developments in edge computing.

Estimates suggest that edge services will be available globally by 2028. Today, the use of edge is situation-specific, but this is likely to change. Edge is going to change the way we use the internet, bringing more use cases of the technology into the picture.

The launch of 5G will also affect the world of edge computing. It will allow us to explore new edge deployments, enabling automation and virtualization capabilities, including better vehicle autonomy and workload migration to the edge.

Harnessing the potential of devices at the edge

Edge computing makes it possible to harness the growing computing capability of devices themselves for real-time deep insights and predictive analytics. Using this potential will help us improve quality and create value through innovation.

Edge computing solutions allow us to increase the speed of digital services, reduce latency, and save costs while ensuring that data processing is in full compliance with local regulations.

Devices at the edge let us keep data close to its point of use rather than sending it to a central location. Processing less data in the cloud or a centralized location significantly lowers latency and costs. At the same time, keeping data on an edge device, rather than moving it back and forth to the cloud or a central data center, helps address privacy and security concerns.

How is edge computing different from other computing models?

There are a large number of computing models available. Some of them are:

- Distributed computing: Multiple computer systems work together to solve one problem. The systems are connected, and the problem is subdivided so that different systems solve different parts of it.

- Parallel computing: Multiple processors or computers are used simultaneously. The problem is subdivided and broken into instructions, which different processors execute at the same time.

- Cluster computing: Two or more computers work in tandem to perform tasks as a single machine.

- Fog computing: A computing layer that sits between the cloud (or a central data center) and the data-producing source.

- Cloud computing: It is a method of computing that involves the delivery of servers, databases, storage, networks, analytics, and intelligence over the internet.

Here are some edge computing use cases and examples

A range of applications, products, and services have the potential to function better by incorporating edge computing. Among the use cases are the following:

- IoT devices: Smart devices can provide real-time insights by running code on the device rather than in the cloud.

- Self-driving cars: To ensure road safety, autonomous cars need to make decisions in real time without waiting for instructions from a server.

- Medical monitoring devices: With lives at stake, instant updates are needed without even a second of delay.

- Retail: Business opportunities can be identified using surveillance, stock tracking, sales data, and other real-time business updates.

- Manufacturing: Edge computing allows the use of technologies like environmental sensors. It also enables the user to monitor and utilize analytics to find production errors and improve product quality.

Ambitions for 2022

STL aims to deliver multi-access edge applications and a multi-access convergent platform for wireless and wireline edge computing.

This, of course, is in line with STL’s greater goal of enabling major enterprises, citizen networks, cloud firms, and telecom companies to offer cutting-edge experiences to their customers. Our company’s objective is to use technology to build next-generation connected experiences that will transform people’s lives.

Want to know more?

1. What is edge computing used for?

Edge computing makes communication faster. It works on the principle that information processing should happen as close as possible to the end user, so instead of running in the cloud, processing occurs on the user’s device or local network. IoT is the area where edge computing is most beneficial. An IoT network can be a collection of entities, each with processing power and connections to the others. Communication between these devices requires some processing, and latency would be high if the devices had to wait for data from the cloud. That is why IoT edge computing devices are necessary.

2. What is “edge” vs. “cloud”?

Edge computing offers faster communication and lower latency because data does not need to travel far. However, cloud computing can process larger amounts of more complex data. In cloud computing, all the data is processed on centralized servers and then sent to the user, which introduces latency. In edge computing, processing happens at the network edge, which could be the user’s device, an IoT network, a local network, or even the ISP.

3. Is a smartphone an edge device?

All devices that have processing capacity and the ability to communicate with the internet can be edge devices, so a smartphone can be an edge device. Processing may even happen offline, with the cloud updated whenever the user’s device reconnects to the internet. This is useful in developing countries where internet connectivity is unreliable, and many financial startups use such systems to implement payments.

4. Will edge computing replace cloud computing?

Edge computing won’t replace cloud computing entirely. Edge computing needs hardware with processing capabilities, which raises implementation costs, and although many devices, such as smartphones, have embedded processors, they can’t handle compute-intensive tasks. Additionally, edge computing can only process small chunks of data, which means industries may lose crucial information. Finally, edge devices often have weaker security than the cloud, which makes them easier targets for malicious users. Because of these challenges, edge and cloud computing will coexist.

5. What is the network edge?

In a network, there is the edge and there is the core. The network edge can be anything close to the end user: their device, the IoT devices they use, a local network, or an ISP. The crucial point is that processing doesn’t happen in the central cloud, which enables fast, low-latency communication. The central cloud is the core of the network; it provides services to the components at the network edge.

6. Is edge computing the future?

The benefits of edge computing are huge, especially where you need numerous devices to perform less compute-intensive tasks. For example, the devices in an IoT network will benefit from edge computing. They don’t need many computational resources but need fast and low latency communication. Therefore, we can say that edge computing will be an integral part of the future.